MODULE 2A: BASIC ACOUSTICS

Vibrations - Signals - Spectra - Envelopes - Waves

Fundamentals of Sound

Physical attributes of acoustic waves - Part I: Introduction
_ Vibrations, Signals, Signal Envelopes, Frequency/Period, Amplitude, Phase
_ Logarithmic Scales
_ Root-Mean-Square Amplitude
_ Linear Superposition & Interference

Physical attributes of acoustic waves - Part II
_ Sound Waves - Inverse Square Law
_ Standing Waves
_ Fourier Analysis/Synthesis - Spectrum & Spectral Envelope - Modulation

Physical attributes of acoustic waves - Part I: Introduction

For an alternative self-study resource, see Sound Waves (University of Salford).

Oscillation: A broad term describing the variation (back-and-forth) of any physical or other property of a system around a reference value.

Vibration: The back-and-forth motion of a mass around a point of reference, called the point of rest or equilibrium.

Vibration is a mechanical phenomenon, a special case of oscillation.

Vibrations vs. Waves: In most cases:

Simple Harmonic Motion: the simplest type of vibration; for example, the motion of a freely swinging pendulum (strictly, only for small swings). See the animation to the right (top).
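The sinusoidal equation for simple harmonic motion is commonly written x(t) = A sin(2πft + φ), where A is the amplitude, f the frequency, and φ the starting phase. The following is a minimal sketch of that equation; the function name `shm_displacement` and the sample values are illustrative choices, not part of the course materials.

```python
import math


def shm_displacement(t, amplitude=1.0, frequency=1.0, phase=0.0):
    """Displacement of an ideal simple harmonic oscillator at time t (seconds).

    x(t) = A * sin(2*pi*f*t + phi), with f in Hz and phi in radians.
    """
    return amplitude * math.sin(2 * math.pi * frequency * t + phase)


# Sample one full cycle of a 2 Hz oscillation (period T = 1/f = 0.5 s)
# at eight equally spaced moments, plus the end point of the cycle.
frequency = 2.0
period = 1.0 / frequency
samples = [shm_displacement(k * period / 8, amplitude=3.0, frequency=frequency)
           for k in range(9)]
for k, x in enumerate(samples):
    print(f"t = {k * period / 8:.4f} s  x = {x:+.3f}")
```

The displacement starts at the point of rest, reaches +A a quarter of the way through the cycle and -A three quarters of the way through, and returns to the point of rest after one period.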

Optional: The video below explains in detail the meaning and derivation of the sinusoidal equation (from Khan Academy's high-school AP physics resources).

Complex vibration/wave: a vibration in which the restoring force is related to displacement in a complex way (i.e. not simply in proportion to it, as it is in simple harmonic motion).

(Acoustical) Signal: A two-dimensional graphic representation of a vibration/wave, plotting
_ on the y (vertical) axis: the displacement (movement away from equilibrium) of some mass, or one of a number of other concepts/measures (e.g. velocity, pressure),
_ over the x (horizontal) axis: time.

The signal above, with its regular, sinusoidal peaks and valleys, represents a simple/sine/pure wave, corresponding in frequency to the note A3 (220 Hz in equal-tempered tuning, assuming the standard A4 = 440 Hz reference).

The signal above also represents a sine wave. This one corresponds in frequency to the note A4 (440 Hz).
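In twelve-tone equal temperament each semitone multiplies frequency by 2^(1/12), so an octave (12 semitones) exactly doubles it. A minimal sketch, assuming the common A4 = 440 Hz reference; the helper name `equal_tempered_frequency` is hypothetical.

```python
def equal_tempered_frequency(semitones_from_a4, a4_hz=440.0):
    """Frequency (Hz) of the note `semitones_from_a4` semitones above (+) or below (-) A4
    in twelve-tone equal temperament: f = a4_hz * 2 ** (n / 12)."""
    return a4_hz * 2 ** (semitones_from_a4 / 12)


a3 = equal_tempered_frequency(-12)  # one octave below A4
a4 = equal_tempered_frequency(0)
print(a3, a4)  # 220.0 440.0
```

The A4 signal therefore completes twice as many cycles per second as the A3 signal.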

The amplitude of both signals is represented by the distance between the top (or bottom) peak and the central horizontal line, which represents the point of rest (equilibrium).

The Signal Envelope is a boundary curve that traces the signal's amplitude through time and, consequently, captures how the energy in the signal changes with time. It outlines the area enclosed by all the maxima of the motion represented by the two-dimensional signal, so it includes points that do not belong to the signal itself (in the images, the signal is in blue and the envelope is in red).
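One simple way to approximate an envelope, following the description above, is to join the local maxima of the signal's magnitude with straight lines. This is only a sketch under that assumption (other methods, such as ones based on the Hilbert transform, are also common); the function name `peak_envelope` and the test signal are illustrative.

```python
import bisect
import math


def peak_envelope(signal):
    """Approximate a signal envelope by joining the local maxima of |signal|
    with straight lines; values before the first and after the last peak are held."""
    mags = [abs(x) for x in signal]
    peaks = [i for i in range(1, len(mags) - 1)
             if mags[i] > mags[i - 1] and mags[i] >= mags[i + 1]]
    if not peaks:
        return mags
    envelope = []
    for i in range(len(mags)):
        if i <= peaks[0]:
            envelope.append(mags[peaks[0]])
        elif i >= peaks[-1]:
            envelope.append(mags[peaks[-1]])
        else:
            j = bisect.bisect_right(peaks, i)  # peaks[j - 1] <= i < peaks[j]
            left, right = peaks[j - 1], peaks[j]
            frac = (i - left) / (right - left)
            envelope.append(mags[left] + frac * (mags[right] - mags[left]))
    return envelope


# A 50 Hz sine whose amplitude decays exponentially, sampled at 8 kHz for 1 s
sample_rate = 8000
t = [n / sample_rate for n in range(sample_rate)]
signal = [math.exp(-3 * ti) * math.sin(2 * math.pi * 50 * ti) for ti in t]
env = peak_envelope(signal)
# Largest deviation of the traced envelope from the true decay curve exp(-3t)
print(max(abs(e - math.exp(-3 * ti)) for e, ti in zip(env, t)))
```

As in the figures, the traced envelope passes through points (between the peaks) that do not belong to the signal itself.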

Signal envelopes and spectral envelopes (described under "Spectrum") are the two features that audio engineers are most likely to manipulate. We will devote substantial time to exploring their physical and perceptual correlates.

The attack stage captures how energy in a signal rises from 0 to its maximum level.

The decay stage captures how vibration energy in a system settles following the initial excitation, assuming continuous excitation.

The sustain stage captures how a sound source responds during continuous excitation.

The release stage captures how energy in a system naturally dies out after the energy supply ends.

Impulse signals: signals resulting from the instantaneous (very short) excitation of a sound source, which is then left to vibrate on its own until the energy dies out (due to some form of friction/resistance).
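The four stages can be sketched as a piecewise-linear gain curve. This is a simplification: real instrument envelopes are usually curved (often roughly exponential), and the function name `adsr_gain` and all parameter values below are hypothetical. The sketch assumes excitation stops only after the attack and decay stages are complete.

```python
def adsr_gain(t, attack, decay, sustain_level, note_off, release):
    """Piecewise-linear ADSR envelope gain (0 to 1) at time t, in seconds.

    attack, decay, release: stage durations (s); sustain_level: 0-1;
    note_off: time (s) when excitation stops. Assumes note_off >= attack + decay.
    """
    if t < 0:
        return 0.0
    if t < attack:                      # attack: 0 -> 1
        return t / attack
    if t < attack + decay:              # decay: 1 -> sustain level
        return 1.0 - (1.0 - sustain_level) * (t - attack) / decay
    if t < note_off:                    # sustain: held while the source is excited
        return sustain_level
    # release: sustain level -> 0 once the energy supply ends
    return max(0.0, sustain_level * (1.0 - (t - note_off) / release))


params = dict(attack=0.1, decay=0.2, sustain_level=0.6, note_off=1.0, release=0.5)
for t in (0.05, 0.2, 0.5, 1.25, 2.0):
    print(t, round(adsr_gain(t, **params), 3))
```

To mimic an impulse signal with this sketch, one could use a very short attack, a zero decay, a sustain level of 1, and a note_off equal to the attack time, so that only the release stage remains (release must be greater than 0).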

Periodic vibration/wave: A vibration/wave that repeats itself at regular time intervals.

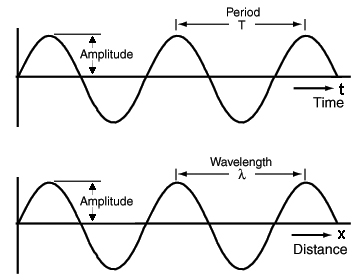

Frequency (f): Number of repetitions (cycles) per unit time, usually expressed in hertz (Hz, cycles per second). The duration of one cycle is the period, T = 1/f. Changes in frequency correspond primarily to changes in pitch.

Amplitude (A): Maximum displacement (of mass, velocity, pressure, etc.) away from the point of rest. Changes in amplitude correspond primarily to changes in loudness.

Phase: Position on the vibration cycle at a given moment in time, usually expressed in degrees (0° to 360°) or radians.

In contrast, changes in phase relationships among multiple simultaneous signals that are identical or close in frequency are rather salient: they correspond to loudness, timbre, pitch, and perceived source-location changes, to be described later in the course. Click on the image below for an example of the influence of phase shift on timbre (i.e. sound quality).
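Because one full cycle (360°) lasts one period T = 1/f, a phase shift of φ degrees between two signals of the same frequency corresponds to a time offset of (φ/360) × T. A minimal sketch; the helper name `phase_to_time_shift` is illustrative.

```python
def phase_to_time_shift(phase_deg, frequency_hz):
    """Time offset (s) equivalent to a phase shift in degrees: (phase / 360) * T, with T = 1/f."""
    period = 1.0 / frequency_hz
    return (phase_deg / 360.0) * period


# The same 90-degree shift corresponds to very different delays at different frequencies
for f in (100.0, 1000.0):
    print(f"{f:.0f} Hz: 90 deg = {phase_to_time_shift(90, f) * 1000:.3f} ms")
```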

Examples of Sound Levels and Human Response (from the Noise Pollution Clearinghouse, http://www.nonoise.org)

| Common sounds | Noise Level [dB] | Musical Dynamics | Effect |
|---|---|---|---|
| Rocket launching pad | 180 | | Irreversible hearing loss |
| Carrier deck jet operation | 140 | | Painfully loud |
| Thunderclap | 130 | | |
| Jet takeoff (200 ft) | 120 | | Maximum vocal effort |
| Pile driver | 110 | | Extremely loud |
| Garbage truck | 100 | fff | Very loud |
| Heavy truck (50 ft) | 90 | ff | Very annoying |
| Alarm clock (2 ft) | 80 | f | Annoying |
| Noisy restaurant | 70 | mf | Telephone use difficult |
| Air conditioning unit | 60 | mp | Intrusive |
| Light auto traffic (100 ft) | 50 | p | Quiet |
| Living room | 40 | pp | |
| Library | 30 | ppp | Very quiet |
| Broadcasting studio | 20 | | |
| | 10 | | Just audible |
| | 0 | | Hearing begins |

See also an additional table of commonly encountered sound levels.
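The decibel values above form a logarithmic scale. Assuming they are sound pressure levels (dB SPL) referenced to the conventional threshold pressure of 20 µPa, a level L and a pressure p are related by L = 20 log10(p / 20 µPa). A minimal sketch; the helper names `spl_db` and `pressure_from_spl` are illustrative.

```python
import math

P_REF = 20e-6  # reference pressure in pascals (20 µPa), i.e. 0 dB SPL


def spl_db(pressure_pa):
    """Sound pressure level in dB SPL: 20 * log10(p / p_ref)."""
    return 20 * math.log10(pressure_pa / P_REF)


def pressure_from_spl(level_db):
    """Inverse of spl_db: the pressure (Pa) that produces a given level."""
    return P_REF * 10 ** (level_db / 20)


print(round(spl_db(20e-6), 1), round(spl_db(1.0), 1))  # 0.0 94.0
# Every +20 dB on this scale is a tenfold increase in sound pressure
print(pressure_from_spl(80) / pressure_from_spl(60))
```

This is why a 20 dB step in the table (e.g. living room to noisy restaurant, 40 dB to 70 dB spans 30 dB) represents a much larger physical change than the numbers alone suggest.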

The amount of energy in a signal can be measured in terms of its 0-to-peak, peak-to-peak, or root-mean-square (RMS) amplitude.

Figure (a): 0-to-peak, peak-to-peak, and RMS amplitudes of a sine signal.
Figure (b): RMS amplitude is a measure of the area outlined by the signal (highlighted).
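For a sine of amplitude A, the 0-to-peak value is A, the peak-to-peak value is 2A, and the RMS value is A/√2 ≈ 0.707A. RMS squares the signal (removing the signs), averages the squares, and takes the square root. A minimal sketch that measures all three on one sampled cycle; the helper name `rms` is illustrative.

```python
import math


def rms(samples):
    """Root-mean-square: square each sample, take the mean, then the square root."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


A = 2.0
N = 1000  # samples covering exactly one period
sine = [A * math.sin(2 * math.pi * n / N) for n in range(N)]

print("0-to-peak:   ", max(sine))
print("peak-to-peak:", max(sine) - min(sine))
print("RMS:         ", rms(sine), "vs A/sqrt(2) =", A / math.sqrt(2))
```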

Application Example: The above issues will be addressed during our "Loudness" module and are directly relevant to anyone planning to enter the sound-for-picture business.

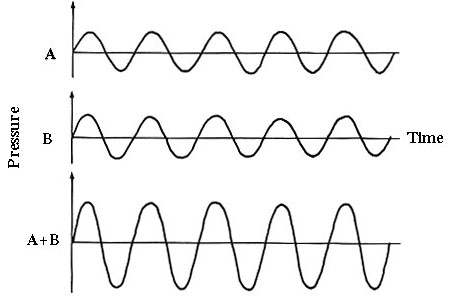

The linear superposition principle states that, at any given moment in time, the total displacement of two superimposed vibrations (waves) is equal to the algebraic sum (i.e. a sum that takes signs, + and -, into account) of the displacements of the original vibrations (waves).
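For sampled signals, superposition is simply a sample-by-sample signed sum. A minimal sketch; the helper name `superpose` and the example frequencies are illustrative.

```python
import math


def superpose(*signals):
    """Linear superposition: the algebraic (signed) sum of equally long signals, sample by sample."""
    return [sum(values) for values in zip(*signals)]


print(superpose([1.0, -2.0, 0.5], [0.5, 0.5, -0.5]))  # [1.5, -1.5, 0.0]

# Two sines (200 Hz and 300 Hz) sampled at 8 kHz for 10 ms
sample_rate = 8000
t = [n / sample_rate for n in range(80)]
a = [math.sin(2 * math.pi * 200 * ti) for ti in t]
b = [0.5 * math.sin(2 * math.pi * 300 * ti) for ti in t]
combined = superpose(a, b)
```

Where one displacement is positive and the other negative, they partly cancel; this is the basis of the interference principle.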


The interference principle (see the animation, below) is an extension of the linear superposition principle. It states that, when two or more vibrations (waves) interact, their combined amplitude may be larger (constructive interference) or smaller (destructive interference) than the amplitude of the individual vibrations (waves), depending on their phase relationship.
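For two sines of equal amplitude A and equal frequency, the identity sin x + sin(x + Δφ) = 2 cos(Δφ/2) sin(x + Δφ/2) shows that the sum is another sine with amplitude 2A|cos(Δφ/2)|: 2A when in phase (constructive), 0 when 180° apart (destructive). A minimal sketch that checks this numerically; the helper name `combined_amplitude` is illustrative.

```python
import math


def combined_amplitude(amplitude, phase_diff_deg, steps=3600):
    """Peak amplitude of A*sin(x) + A*sin(x + dphi), found by sampling one cycle."""
    dphi = math.radians(phase_diff_deg)
    return max(abs(amplitude * math.sin(x) + amplitude * math.sin(x + dphi))
               for x in (2 * math.pi * k / steps for k in range(steps)))


for deg in (0, 90, 180):
    predicted = 2 * 1.0 * abs(math.cos(math.radians(deg) / 2))  # 2A|cos(dphi/2)|
    print(deg, round(combined_amplitude(1.0, deg), 3), round(predicted, 3))
```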

Interactive tool exploring interference between two sines with different frequencies

Physical attributes of acoustic waves - Part II

Sound waves - Inverse Square Law

Wave: Transfer of vibration energy across a medium (e.g. air, string, etc.).

Wavelength (λ): The distance a wave travels during the time it takes to complete one vibration cycle.
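Since a wave travels at speed c and one cycle lasts T = 1/f, the wavelength is λ = c × T = c/f. A minimal sketch using this module's value for the speed of sound in air (c ≈ 345 m/s at about 21°C); the helper name `wavelength` is illustrative.

```python
SPEED_OF_SOUND = 345.0  # m/s, air at about 21 °C (value used in this module)


def wavelength(frequency_hz, c=SPEED_OF_SOUND):
    """Wavelength in metres: lambda = c / f."""
    return c / frequency_hz


# Roughly the extremes of the audible range, plus two reference points
for f in (20, 345, 1000, 20000):
    print(f"{f} Hz -> {wavelength(f):.4f} m")
```

Low frequencies therefore have wavelengths of many metres, while high frequencies have wavelengths of a few centimetres or less.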

Speed of sound in air: c ≈ 345 m/s at 21°C, or about 1130 ft/s at 70°F.

The speed of sound in air increases with temperature, because rising temperatures a) reduce the air's density, if the air is unconstrained and free to expand (e.g. outdoors), or b) increase its stiffness, if the air is constrained and cannot expand (e.g. indoors).

Transverse waves: waves propagating perpendicularly to the direction of the vibration (e.g. waves on a string).

Longitudinal waves: waves propagating parallel to the direction of the vibration (e.g. sound waves in air).

Image sources:
https://waitbutwhy.com/2016/03/sound.html
https://www.acs.psu.edu/drussell/demos/waves/wavemotion.html

Inverse Square Law
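A minimal sketch of the inverse square law, assuming an idealized point source radiating equally in all directions in a free field (no reflections): the same power spreads over a sphere of area 4πr², so intensity falls as 1/r², which is about a 6 dB drop per doubling of distance. The helper names are illustrative.

```python
import math


def intensity_ratio(r1, r2):
    """I(r2) / I(r1) for an ideal point source in a free field: intensity falls as 1 / r**2."""
    return (r1 / r2) ** 2


def level_change_db(r1, r2):
    """Level change (dB) moving from distance r1 to r2: 10*log10(I2/I1) = -20*log10(r2/r1)."""
    return 10 * math.log10(intensity_ratio(r1, r2))


for r2 in (2, 4, 10):
    print(f"1 m -> {r2} m: intensity x {intensity_ratio(1, r2):.4f}, "
          f"level {level_change_db(1, r2):+.2f} dB")
```

Indoors, reflections add energy, so measured levels usually fall off more slowly than this idealization predicts.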

Interactive tool exploring standing waves (The Physics Classroom)

Interference of sound waves from sources separated by some distance [source]

Fourier Analysis/Synthesis - Spectrum & Spectral Envelope - Modulation


Ideal signal-forms (also called 'waveforms') and spectra

Key: A: amplitude of the first component; n: component number

(a) An ideal sawtooth signal (far right) is formed by summing an infinite number of harmonic components (all of them, odd and even), with amplitudes $A/n$ and a $180^\circ$ phase shift between odd- and even-numbered components, as illustrated on the spectrum (right). The blue points marking the amplitudes of the even components are on the opposite side of the blue points marking the amplitudes of the odd components, to illustrate this phase shift.

(b) An ideal triangular signal (far right) is formed by summing an infinite number of only odd-numbered harmonic components, with amplitudes $A/n^2$ and a $180^\circ$ phase shift between successive odd components, as illustrated on the spectrum (right).

(c) An ideal square signal (far right) is formed by summing an infinite number of only odd-numbered harmonic components, with amplitudes $A/n$, all in phase with one another, as illustrated on the spectrum (right).
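Recipes (a) to (c) can be checked numerically by summing a finite number of terms (a partial Fourier sum). A minimal sketch with A = 1 and sine-phase components, which is an assumed convention (the figures may use a different starting phase); the function names are illustrative. At x = π/2 (a quarter of the fundamental's cycle) the three sums approach π/4, π²/8, and π/4 respectively.

```python
import math


def sawtooth(x, n_terms):
    # all harmonics, amplitude A/n (A = 1), sign alternating between odd and even n
    return sum((-1) ** (n + 1) * math.sin(n * x) / n for n in range(1, n_terms + 1))


def triangle(x, n_terms):
    # odd harmonics only, amplitude A/n**2, sign alternating between successive odd components
    return sum((-1) ** k * math.sin((2 * k + 1) * x) / (2 * k + 1) ** 2 for k in range(n_terms))


def square(x, n_terms):
    # odd harmonics only, amplitude A/n, all in phase
    return sum(math.sin((2 * k + 1) * x) / (2 * k + 1) for k in range(n_terms))


x = math.pi / 2
print(sawtooth(x, 2000), math.pi / 4)
print(triangle(x, 2000), math.pi ** 2 / 8)
print(square(x, 2000), math.pi / 4)
```

The triangle sum converges fastest because its components fall off as 1/n² rather than 1/n; its spectrum has far less high-frequency energy.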

First 6 harmonic components of a sawtooth signal, analogous to those generated by strings.

Pitch/note values of each component of the signal to the left (assuming a fundamental of 256 Hz), and the corresponding standing waves on a string.
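The nth harmonic has frequency n × f1, and on a string of length L fixed at both ends it corresponds to a standing wave with n half-wavelengths along the string (λn = 2L/n). A minimal sketch using the figure's 256 Hz fundamental; the 0.65 m string length is a hypothetical value for illustration only.

```python
fundamental_hz = 256.0    # fundamental assumed in the figure
string_length_m = 0.65    # hypothetical string length, for illustration only

harmonics = [n * fundamental_hz for n in range(1, 7)]                # nth harmonic = n * f1
wavelengths = [2 * string_length_m / n for n in range(1, 7)]         # n half-wavelengths on the string

for n, (f, lam) in enumerate(zip(harmonics, wavelengths), start=1):
    print(f"harmonic {n}: {f:.0f} Hz, standing-wave wavelength {lam:.3f} m")
```

The ratios between successive harmonics (2:1, 3:2, 4:3, ...) correspond to the octave, the perfect fifth, the perfect fourth, and so on.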

Experiment, again, with this Fourier synthesis java applet

[Optional: detailed discussion of spectra]

Fourier analysis drawbacks

Spectra arising from Fourier analysis are, unfortunately, never as clean and precise as the ones in the above figures.

The frequency values returned from the analysis are frequency ranges/bands, not single frequency values. In the figures below, compare the spectral lines (left) vs. bands (right).

There is a time/frequency trade-off:

a) the more precisely we want to know how the energy of a spectrum changes with time, the shorter the signal chunks analyzed must be, the broader the frequency bands returned by the analysis, and the less precisely the frequency values will be represented; and

b) the more precisely we want to know the frequencies in a spectrum, the longer the signal chunks analyzed must be, and the less precisely the level changes of a sound over time will be captured.

In other words, we can only increase the frequency resolution of the analysis at the expense of its time resolution, and vice versa.

This trade-off is related to Heisenberg's uncertainty principle (after the 20th-century German physicist Werner Heisenberg). Oversimplified, it states that the more precisely we determine the position of a particle, the less precisely we can know its momentum (momentum = mass × velocity), and vice versa.

In addition, a series of assumptions accompanies the standard mathematical implementation of Fourier analysis:

(a) the signal analyzed has infinite duration, and

(b) all the energy within a frequency band returned in the analysis lies at the band's upper end.

Violation of these assumptions results in spectral "smearing" (i.e. spectral components that are artifacts of the analysis process, surrounding the actual spectral components of a signal; see below, right).

Frequency and amplitude values of the spectral components used to synthesize the complex signal to the right.

Complex signal synthesized by adding the two sinusoidal signals described by the spectrum to the left.

Spectrum resulting from Fourier analysis of 17 ms-long portions of the same signal.

Temporal resolution: 17 ms (0.017 s)

Frequency resolution: 1/0.017 s ≈ 59 Hz
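The resolution figures above follow a simple rule of thumb: the frequency resolution of a Fourier analysis is roughly the reciprocal of the analysis-window duration. For a discrete Fourier transform of N samples at sample rate fs, the bins are spaced fs/N Hz apart, which equals 1/duration. A minimal sketch; the 48 kHz sample rate and the helper names are assumed example values.

```python
def frequency_resolution(window_s):
    """Approximate frequency resolution (Hz) for an analysis window of `window_s` seconds."""
    return 1.0 / window_s


def dft_bin_spacing(sample_rate_hz, n_samples):
    """Spacing (Hz) between DFT bins for an N-sample window: fs / N (= 1 / window duration)."""
    return sample_rate_hz / n_samples


print(round(frequency_resolution(0.017), 1))  # 58.8
print(round(dft_bin_spacing(48000, 816), 1))  # 816 samples at 48 kHz last 17 ms
# The trade-off: doubling the window halves the band width but blurs timing twice as much
print(round(frequency_resolution(0.034), 1))
```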

Amplitude and Frequency Modulation

In broadcast technology, the terms Amplitude Modulation (AM) and Frequency Modulation (FM) describe two specific signal processing techniques used in the wireless transmission of audio signals.

In simple terms, audio signals can be transmitted over large distances more robustly and with less contamination if they are "carried" by higher-frequency signals, which

a) have more energy (for a given amplitude, energy is proportional to the square of frequency), and

b) can be transmitted/received by smaller antennas than those required for lower frequencies (the higher the frequency, the shorter the wavelength).

So, rather than being transmitted directly, the intended signal is transmitted in the form of a modulation (i.e. modification) of a high-frequency carrier signal.

Most common application: AM and FM Radio.

High-frequency sinusoidal carrier (not shown) modified by a low-frequency signal (black) through a) amplitude modulation (producing the signal in red) or b) frequency modulation (producing the signal in blue).

In its simplest case:

i) Sinusoidal Amplitude Modulation refers to the sinusoidal variation of a carrier signal's amplitude, at a rate equal to the modulating signal's frequency and at a degree (or depth) equal to the modulating signal's amplitude. The analogous musical term is "Tremolo," describing the sensation of slow loudness fluctuations, similar to the sensation associated with interference and "beating."

ii) Sinusoidal Frequency Modulation refers to the sinusoidal variation of a carrier signal's frequency, at a rate equal to the modulating signal's frequency and at a degree equal to some proportion of the modulating signal's frequency, depending on the "modulation index." [optional details] The analogous musical term is "Vibrato," describing the sensation of slow pitch fluctuations.

In (a), below, the bottom signal is an AM signal, resulting when the top sine signal modulates the amplitude of a high-frequency carrier sine signal (not shown).

In (b), a low-frequency sine (black) is modulating a high-frequency carrier sine (blue), resulting in the FM signal at the bottom (red).

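A minimal sketch of the two modulation types, assuming the standard textbook forms x_AM(t) = A[1 + m sin(2π f_mod t)] sin(2π f t) and x_FM(t) = A sin(2π f t + β sin(2π f_mod t)), where m is the modulation depth (as a fraction) and β is the modulation index. With this FM form the peak frequency deviation is β × f_mod. The function names and parameter values are illustrative.

```python
import math


def am_sample(t, carrier_hz, amplitude, mod_hz, depth):
    """Sinusoidal AM: the carrier's amplitude swings as A * (1 + m * sin(2*pi*fmod*t))."""
    envelope = amplitude * (1 + depth * math.sin(2 * math.pi * mod_hz * t))
    return envelope * math.sin(2 * math.pi * carrier_hz * t)


def fm_sample(t, carrier_hz, amplitude, mod_hz, index):
    """Sinusoidal FM: phase 2*pi*fc*t + beta*sin(2*pi*fmod*t), so the instantaneous
    frequency is fc + beta * fmod * cos(2*pi*fmod*t) (peak deviation beta * fmod)."""
    return amplitude * math.sin(2 * math.pi * carrier_hz * t
                                + index * math.sin(2 * math.pi * mod_hz * t))


sample_rate = 8000
t = [n / sample_rate for n in range(sample_rate)]             # 1 s
am = [am_sample(ti, 1000.0, 1.0, 5.0, 0.5) for ti in t]       # tremolo-like: 5 Hz, 50 % depth
fm = [fm_sample(ti, 1000.0, 1.0, 5.0, 4.0) for ti in t]       # vibrato-like: 5 Hz, +/-20 Hz
print(max(am), max(fm))
```

The AM signal's peaks swing between A(1 - m) and A(1 + m), while the FM signal keeps a constant amplitude A and only its cycle spacing changes.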

Experiment with these AM & FM applets

Signal and spectral representations of amplitude-modulation rate and depth

Based on the above graphs, can you deduce the spectral correlates of the rate and depth parameters of amplitude modulation?

(answer just below)

Sinusoidal amplitude modulation [with modulation rate $f_{mod}$ (in Hz) and modulation depth $m$ (in %)] of a sine signal (with frequency $f$ and amplitude $A$) results in a signal with

a) an amplitude that fluctuates above/below $A$ by an amount that depends on the modulation depth (between $A(1 - m)$ and $A(1 + m)$), at a rate equal to the modulation rate, and

b) a spectrum that has three components: the original sine ($f$; $A$) and two sidebands, also determined by the modulation rate and depth:

_ a low-frequency sideband: $f_{low} = f - f_{mod}$, $A_{low} = \tfrac{1}{2} m A$

_ a high-frequency sideband: $f_{high} = f + f_{mod}$, $A_{high} = \tfrac{1}{2} m A$ (i.e. $A_{high} = A_{low}$)

In other words, modulation depth defines the combined amplitude of the two sidebands ($A_{low} + A_{high} = mA$) as a percentage of the amplitude of the original sine.

For example:

If the amplitude of a sine signal ($f$ = 2000 Hz and $A$ = 4) is modulated at a rate $f_{mod}$ = 10 Hz and depth $m$ = 60%, then

a) the resulting AM signal will have the same frequency ($f_{AM}$ = 2000 Hz), but an amplitude that fluctuates above/below $A$ = 4 (between $A(1 - m) = 1.6$ and $A(1 + m) = 6.4$) at a rate of 10 times per second.

b) the spectrum of the resulting AM signal will have three components: the original sine ($f$ = 2000 Hz and $A$ = 4) and two sidebands:

_ $f_{low} = f - f_{mod} = 2000 - 10 = 1990$ Hz, $A_{low} = \tfrac{1}{2} m A = 0.5 \times 0.6 \times 4 = 1.2$

_ $f_{high} = f + f_{mod} = 2000 + 10 = 2010$ Hz, $A_{high} = \tfrac{1}{2} m A = 0.5 \times 0.6 \times 4 = 1.2$
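The worked example can be checked numerically. The sketch below synthesizes 1 s of the AM signal, assuming an 8000 Hz sample rate and the modulation form A[1 + m sin(2π f_mod t)] sin(2π f t), then measures the amplitude at 1990, 2000, and 2010 Hz with a single discrete Fourier transform (DFT) bin each. Because 1 s holds a whole number of cycles of every component, each bin lines up exactly with a component and no spectral smearing occurs. The helper name `component_amplitude` is illustrative.

```python
import cmath
import math


def component_amplitude(samples, sample_rate, freq):
    """Amplitude of the sinusoidal component at `freq`, from a single DFT bin.

    Exact only when the window holds a whole number of cycles of `freq`.
    """
    acc = sum(x * cmath.exp(-2j * math.pi * freq * n / sample_rate)
              for n, x in enumerate(samples))
    return 2 * abs(acc) / len(samples)


sample_rate = 8000
f, A, f_mod, m = 2000.0, 4.0, 10.0, 0.6  # values from the worked example
am = [A * (1 + m * math.sin(2 * math.pi * f_mod * n / sample_rate))
      * math.sin(2 * math.pi * f * n / sample_rate)
      for n in range(sample_rate)]        # 1 s of signal

measured = {freq: component_amplitude(am, sample_rate, freq)
            for freq in (f - f_mod, f, f + f_mod)}
for freq, amp in measured.items():
    print(f"{freq:.0f} Hz: amplitude {amp:.3f}")
```

The measured values match the worked example: 1.2 for each sideband and 4 for the original sine.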

Loyola Marymount University - School of Film & Television